Introduction: The Governance Gap

Artificial intelligence and machine learning (AI/ML) are increasingly central to modern healthcare, powering advanced diagnostics, imaging interpretation, and clinical decision support tools. These systems promise improvements in accuracy, efficiency, and patient outcomes. However, their dynamic, adaptive nature presents a unique regulatory challenge. Most current oversight frameworks were built for static medical devices, not algorithms that evolve over time. As a result, a growing governance gap has emerged—defined by regulatory lag, fragmented oversight, and uncertainty regarding accountability and safety.

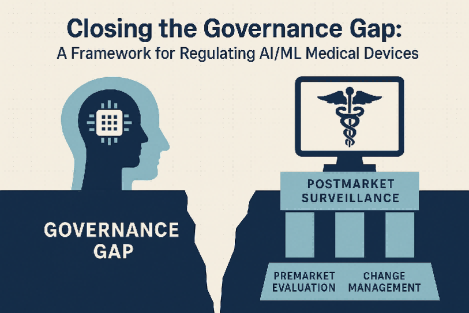

This gap creates a dilemma for regulators: either impose rigid controls that may hinder innovation, or risk patient safety by allowing inadequately monitored systems to operate in clinical settings. A modernized regulatory approach is therefore essential: one that emphasizes adaptability, supports continuous oversight across the product lifecycle, and prioritizes transparency, harmonization, and public trust. Without such an approach, the full potential of AI in medicine may remain unrealized or, worse, AI may be deployed in ways that do more harm than good [1, 2, 3].

Current Regulatory Approaches: Strengths and Shortcomings

Regulators such as the U.S. Food and Drug Administration (FDA), and frameworks such as the European Union’s Medical Device Regulation (EU MDR), currently assess AI/ML medical software through existing mechanisms. In the United States these are 510(k) clearance and premarket approval (PMA); in the EU they are the MDR’s standard risk classification and conformity assessment. While these pathways have proven effective for conventional devices, they fall short in addressing the continuous learning and adaptation inherent to AI/ML systems [4, 5].

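The FDA’s 2019 proposed regulatory framework for modifications to AI/ML-based Software as a Medical Device (SaMD) marked a crucial step forward. It introduced a total product lifecycle (TPLC) approach, including predetermined change control plans that describe in advance both which modifications an algorithm may undergo after authorization (the SaMD Pre-Specifications) and how those modifications will be implemented and validated (the Algorithm Change Protocol). However, ambiguity persists, particularly around what constitutes a “substantial change” requiring a new premarket review, leaving manufacturers and regulators alike without clear guidance [6].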

Moreover, traditional postmarket surveillance is too slow and inflexible to manage AI-specific risks such as algorithmic bias, data drift (shifts in the data a model encounters after deployment relative to its training data), and performance variability across patient populations. These limitations underscore the urgent need for regulatory innovation that can keep pace with the dynamic nature of AI in clinical care [7, 8].

Toward a General Governance Framework for AI/ML Medical Devices

To address these limitations, a comprehensive governance framework must be built around three pillars: rigorous premarket evaluation, adaptive postmarket surveillance, and transparent change management protocols. This approach must be tailored to the evolving nature of AI while safeguarding safety and effectiveness.

A lifecycle-based oversight strategy would treat AI devices not as static entities but as systems that evolve continuously. This means allowing algorithm updates under clear regulatory conditions, supported by predetermined change control plans that specify in advance which updates are permitted, how they will be validated, and what performance they must achieve. Because updates inside this pre-authorized envelope would not each require a fresh premarket review, these plans would reduce unnecessary delays while maintaining critical safety checks.
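To make the idea concrete, here is a minimal Python sketch of how a predetermined change control plan might be checked mechanically when an update is proposed. It assumes a heavily simplified plan that lists pre-authorized change types plus minimum sensitivity and specificity on a locked test set. The class names, change-type labels, decision strings and thresholds are all hypothetical illustrations, not an FDA-defined format.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class ChangeControlPlan:
    """Hypothetical, simplified predetermined change control plan.

    Field names are illustrative only; they are not an FDA-defined schema.
    """
    allowed_change_types: FrozenSet[str]  # modifications pre-authorized by the plan
    min_sensitivity: float                # acceptance criteria, measured on a
    min_specificity: float                # locked (unchanged) test set


@dataclass(frozen=True)
class ProposedUpdate:
    """A hypothetical candidate algorithm update and its locked-test-set results."""
    change_type: str
    sensitivity: float
    specificity: float


def review_update(plan: ChangeControlPlan, update: ProposedUpdate) -> str:
    """Route a proposed update under the plan (illustrative decision labels)."""
    if update.change_type not in plan.allowed_change_types:
        # Not pre-specified in the plan: falls back to conventional premarket review.
        return "new_submission_required"
    if (update.sensitivity < plan.min_sensitivity
            or update.specificity < plan.min_specificity):
        # Pre-specified change type, but it missed the acceptance criteria.
        return "rejected_failed_acceptance_criteria"
    # Within the pre-authorized envelope and meets the acceptance criteria.
    return "deploy_under_plan"


if __name__ == "__main__":
    plan = ChangeControlPlan(
        allowed_change_types=frozenset({"retrain_on_new_site_data", "recalibrate_threshold"}),
        min_sensitivity=0.90,
        min_specificity=0.85,
    )
    print(review_update(plan, ProposedUpdate("recalibrate_threshold", 0.92, 0.88)))
    # -> deploy_under_plan
    print(review_update(plan, ProposedUpdate("change_architecture", 0.95, 0.90)))
    # -> new_submission_required
```

A real plan would likely also cover data management, retraining procedures and how updates are communicated to users. The sketch captures only the routing logic: anything outside the pre-specified envelope falls back to conventional premarket review.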

Postmarket performance monitoring would need to shift toward real-time surveillance, leveraging real-world evidence to evaluate accuracy, fairness, and clinical effectiveness. Tools like audit trails and continuous performance metrics would be essential in identifying emerging issues and enabling rapid response.
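As an illustration of continuous performance metrics paired with an audit trail, here is a minimal sketch. It tracks rolling accuracy over the most recent cases whose ground truth has been confirmed, and it records every case and every alert in an append-only log. The class name, window size, alert threshold and minimum case count are illustrative assumptions. Accuracy also stands in for whatever metrics (for example sensitivity, specificity or calibration) a real monitoring plan would pre-specify.

```python
from collections import deque
from datetime import datetime, timezone
from typing import Optional


class RollingMonitor:
    """Hypothetical postmarket monitor: rolling accuracy over the most recent
    confirmed cases, plus an append-only audit trail of cases and alerts."""

    def __init__(self, window_size: int = 200, alert_threshold: float = 0.85,
                 min_cases: int = 50) -> None:
        self.window = deque(maxlen=window_size)  # oldest results drop out automatically
        self.alert_threshold = alert_threshold   # illustrative value, not a standard
        self.min_cases = min_cases               # avoid alerting on very few cases
        self.audit_log = []                      # entries are appended, never edited

    def _log(self, entry: dict) -> None:
        entry["time"] = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(entry)

    def record(self, case_id: str, prediction: int,
               confirmed_label: int) -> Optional[float]:
        """Log one case once its ground truth is known; return the rolling
        accuracy, or None until at least min_cases results are in the window."""
        self.window.append(prediction == confirmed_label)
        self._log({"case_id": case_id, "prediction": prediction,
                   "confirmed_label": confirmed_label})
        if len(self.window) < self.min_cases:
            return None
        accuracy = sum(self.window) / len(self.window)
        if accuracy < self.alert_threshold:
            # Flag for human review; the alert itself becomes part of the audit trail.
            self._log({"event": "performance_alert", "rolling_accuracy": accuracy})
        return accuracy

    def alerts(self) -> list:
        return [e for e in self.audit_log if e.get("event") == "performance_alert"]
```

The `deque` with `maxlen` discards the oldest result automatically. As a result, the metric reflects recent performance rather than a lifetime average, which is what lets gradual degradation, for instance from data drift, surface as an alert.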

Transparency is also critical—not only for regulators but for clinicians and patients. Clear documentation of how an algorithm works, what data it was trained on, and how it makes decisions would help build trust and facilitate responsible use.

Finally, ethical principles must be embedded in every layer of the framework. This includes actively mitigating algorithmic bias, ensuring equitable performance across diverse patient groups, and protecting patient data privacy. Global harmonization through initiatives like the International Medical Device Regulators Forum (IMDRF) and its Good Machine Learning Practice (GMLP) guiding principles can help align standards and foster cross-border collaboration [9, 10, 11].
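One simple way to put “equitable performance across diverse patient groups” into practice is to compare a metric such as sensitivity across subgroups. Under that assumption, the sketch below flags any group whose sensitivity trails the pooled sensitivity by more than a chosen margin. The record format, group labels and the 0.05 margin are hypothetical. This is also only one of several possible fairness criteria.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Record = Tuple[str, int, int]  # hypothetical format: (subgroup, prediction, confirmed label), binary 0/1


def sensitivity_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Sensitivity (true positive rate) per subgroup, over groups with >= 1 positive case."""
    true_pos: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, prediction, label in records:
        if label == 1:
            positives[group] += 1
            true_pos[group] += int(prediction == 1)
    return {g: true_pos[g] / positives[g] for g in positives}


def flag_underperforming_groups(records: Iterable[Record], max_gap: float = 0.05) -> List[str]:
    """Return subgroups whose sensitivity trails the pooled sensitivity by more than max_gap."""
    records = list(records)  # iterated twice below
    per_group = sensitivity_by_group(records)
    if not per_group:
        return []  # no positive cases, so sensitivity is undefined
    pooled = sensitivity_by_group(("all", p, y) for _, p, y in records)["all"]
    return sorted(g for g, s in per_group.items() if pooled - s > max_gap)
```

Consider one group at 0.9 sensitivity and another at 0.6, each with the same number of positive cases. Pooled sensitivity is then 0.75, so only the second group is flagged. In practice, small subgroups make such estimates noisy, so a gap would typically be reported with confidence intervals before being treated as evidence of bias.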

Conclusion: Governance for Innovation and Trust

AI/ML medical devices are transforming clinical practice, but without appropriate governance, their risks may outweigh their benefits. Bridging the regulatory gap is essential—not only to foster innovation but to protect patients and preserve public trust. A forward-looking governance framework must be adaptive, transparent, and grounded in ethical principles, with lifecycle oversight that reflects the dynamic nature of these technologies.

By aligning regulation with the realities of modern AI systems and encouraging global cooperation, the healthcare community can ensure that AI/ML devices are deployed safely, equitably, and effectively. Closing the governance gap is not just a regulatory imperative—it is a moral one, critical to the responsible future of digital health.

References

1. Reddy S. Global Harmonization of Artificial Intelligence-Enabled Software as a Medical Device Regulation: Addressing Challenges and Unifying Standards. Mayo Clin Proc Digit Health. 2024 Dec 24;3(1):100191. doi:10.1016/j.mcpdig.2024.100191. PMID: 40207007; PMCID: PMC11975980.

2. Schmidt J, Schutte NM, Buttigieg S, et al. Mapping the regulatory landscape for artificial intelligence in health within the European Union. NPJ Digit Med. 2024;7:229. doi:10.1038/s41746-024-01221-6.

3. Rincon N, Gerke S, Wagner JK. Implications of an Evolving Regulatory Landscape on the Development of AI and ML in Medicine. Pac Symp Biocomput. 2025;30:154-166. doi:10.1142/9789819807024_0012. PMID: 39670368; PMCID: PMC11649012.

4. U.S. Food and Drug Administration. Artificial Intelligence and Machine Learning Software as a Medical Device (SaMD). Draft Guidance and Action Plan Documents. 2024–2025.

5. Wong ZS, et al. The illusion of safety: A report to the FDA on AI healthcare product regulation. NPJ Digit Med. 2024;7(1):112.

6. NAMSA. FDA’s Regulation of AI/ML SaMD. 2024.

7. RAPS. The global regulatory landscape for AI/ML-enabled medical devices. 2024.

8. Rieke N, et al. The future of digital health with federated learning. NPJ Digit Med. 2020;3:119.

9. Johner Institute. Regulatory requirements for medical devices with machine learning. 2025 Apr 15.

10. Kaissis GA, et al. Secure, privacy-preserving, and federated machine learning in medical imaging. Nat Mach Intell. 2020;2(6):305-311.

11. ScienceDirect. Global Harmonization of Artificial Intelligence-Enabled Software as a Medical Device (AI-SaMD). Comput Struct Biotechnol J. 2024;22:123-135.
